The Life Rationing Problem by Vincent A. '17

how do you save a life

Introduction: Keeping up with political news this month has, paradoxically, become a rather depressing pastime of mine. Sometimes, it’s fun to vanish into a world somewhat tangential to reality, somewhat well-defined, somewhat abstract, and entirely distracting. This is a more fleshed-out version of a PowerPoint literary presentation I gave at Alpha Delta Phi a while ago. So without further ado (keeping in mind that this is all very theoretical and somewhat subjective), let’s dive in, shall we?

We will examine scenarios in which some life must be lost (a specific case of the general rationing problem, in which some people must lose out on what is being rationed) and will argue for how to resolve the life allocation problem ethically. In particular, we will argue that there is likely no rigorous moral principle that completely solves the problem, but that we can strongly depend on the moral measure of intuition to arrive at a solution.

Careful Formulation

To begin, we will attempt to find a moral principle that solves the problem. If such a principle exists, it should be able to account for differing intuitions in variants of the life-rationing problem. Suppose we simplistically define the problem as, “Given two scenarios, either of which will result in loss of life, which scenario is the more moral option to choose?” The problem is not very interesting, prima facie, if each scenario results in an equal loss of life (say, one life each). Without further facts, we will be unable to make a meaningful decision. Is this still true if the scenarios result in unequal losses of life? Our intuition will be to simply pick the scenario that saves the greatest number of lives, a utilitarian approach at heart, but we will show this is not so straightforward by formulating two variants of the problem:

The Organ Variant: Five people need organs or they will die. A doctor decides to grab a healthy patient who shows up for a checkup. He takes the patient’s organs and saves the five with them.

The Scarce Medicine Variant: Five sick people each need a small dose of medicine or they will die. One other sick person needs a large dose of this medicine or will die as well. The doctor chooses to give the five the small doses rather than the one the large dose.

We intuitively find the organ variant morally impermissible, and the scarce medicine variant morally permissible, despite their equal life-saving outcome. Thus our original moral intuition that we simply pick the case that saves more people (call this the maximum principle) requires refining.

Saving the moral good

The first important thing about the maximum principle is that it operates independently of the specifics of the scenarios, and thus doesn’t care enough about certain properties. What might it care more about, to be more effective? To see this, let’s consider the original uninteresting case where either scenario results in loss of precisely one life. The maximum principle will be unable to choose one over the other in this case. In particular, choosing one life over another will require a concept of deservedness (one person deserving to live over the other) that the maximum principle doesn’t account for. Ethically, the only way the principle could incorporate deservedness would be if it could give one person greater weight than the other. It seems plausible that such a weight would have to be a moral one. If one scenario involved saving a hardened murderer, the other a humanitarian, then the case seems clearer. Thus, for the maximum principle to be effective in cases of equality, we might refine it to the maximum goodness principle, which calls for the scenario that saves the maximum number of morally good people. In the humanitarian versus murderer situation, the result of this principle is certainly intuitively pleasing. It is also pleasing in the scarce medicine variant. In particular, we will have to assume a sort of equal morality among all people involved, in the absence of any other information, and thus saving the five instead of the one saves five morally good people as opposed to one morally good person, which satisfies the maximum goodness principle. If, however, these five were hardened murderers, and the one was a humanitarian, it doesn’t seem morally repugnant to save the one over the five.

There are however three problems with this principle.

Firstly, it requires that we have an independent system of morality, wherein moral goodness and badness can be defined and assigned. I claim this is necessary, however: if we are to prefer saving one person over another in a morally-directed life-rationing problem, a relevant weighting to consider seems to be their individual moralities. The problem of course is that we assume positive morality is an inherently good thing, but there is no truly universal system for gauging which actions are morally positive, and thus which kind of people are morally positive. To not be bogged down by this, we will have to grant the existence of some morally sound system, X, which simultaneously enforces and black-boxes (i.e. hides the internal workings of) moral weightings.

Secondly, there is a convincing argument that can be made for the concept of moral luck, wherein a person’s moral rightness or wrongness might be entirely out of their hands—perhaps a result of genetics or a terrible upbringing, and thus scenarios which necessarily work against them, such as the maximum goodness principle, form a system of double injustice—punishing a person even more for an already bad situation they are unable to control. However, there are two alternatives: choosing the morally positive person to die, which forces the same problem of punishing a person for a situation they are unable to control, and choosing randomly, which might be possible, but turns a blind eye to the significance of positive morality over negative morality. In particular, the existence of moral luck does not mitigate the expected negative consequences associated with a morally repugnant person, and it seems plausible to save the morally positive person, simply because of the value placed on moral positivity, a value that should be independent of moral luck.

Finally, and crucially, the maximum goodness principle does not account for the organ variant. In particular, it would require that we kill the one healthy patient to save the five, if prima facie, we assume equal morality among everyone. But this is intuitively unappealing. Even more problematically, supposing the one patient whose organs we choose to harvest was to some slight degree more morally repugnant than the other five patients, we still find it intuitively problematic to kill him and take his organs. This suggests that the maximum goodness principle must be further refined.

A similar scenario

To make any potential moral principles we come up with even more appealing, we will bring up a third and popular variant of the life-rationing problem:

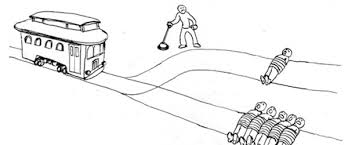

The Train/Trolley Variant: Five people are bound to a rail, and an incoming train approaches. You stand nearby, and hold a switch which if pulled, directs the train to a different rail, which happens to have one person on it. You pull the switch anyway, saving five to kill the one.

This seems morally permissible, and is in line with the result of the maximum goodness principle. However, note the similarity of the train variant with the organ variant. In both instances,

Five people are in danger of death on the one hand.

On the other hand, one person is not strictly in danger of death.

If you do nothing, this one person survives.

If you perform an action (*), you save the five.

What makes the difference then is this action (*), despite these glaring similarities. In one case, you pull a switch. In the other, you harvest the organs. Thus, whatever moral principle may account for these intuitions—and whatever refinement our maximum goodness principle makes—must be dependent solely on some property of these actions. What could this property be? Three candidates come to mind.

1) There is a moral distinction between killing a person (by collecting their organs) and letting them die (by pulling the switch).

2) Killing the patient involves introducing a new threat to him, whereas pulling the switch only redirects an existing threat.

3) Killing the patient involves a severity of action that affects our sensibilities. Pulling a switch is less macabre.

We account for each of these theories in turn. The one person bound to the other rail in the train variant could plausibly attack (1) by insisting that pulling the switch is necessarily a killing action. They can plausibly insist that pulling the switch can’t quite be thought to be letting die, because they don’t die if you don’t pull the switch, and it is your action that is strictly responsible for their death—a marked distinction from, say, letting a “Do Not Resuscitate” patient die by doing nothing—in which your inaction allows their death.

This insistent life-frightened person could similarly attack (2) by an analogous argument: that there is no existing threat to their life, and that you knowingly introduce one by pulling the switch and directing the train toward them. In the absence of the pulled switch, there is a complete absence of threats.

As for (3), one would have to examine exactly what the grimness or macabre nature of the death has to say about the morality of the cause of death. In particular, if we say that the doctor who kills his patient has done something so reprehensible, we are in effect saying one of two things: a) that the manner by which he killed his patient is reprehensible or b) that the very act of killing his patient is reprehensible. Of course, we are trying to figure out what about killing the patient is so intuitively disturbing, and thus b) makes our argument circular, by claiming that killing the patient is what makes killing the patient reprehensible. On the other hand, a) redirects our question elsewhere, as we are concerned with the very nature of the killing, rather than the mechanics of the act itself. To see this, observe that if we know that pulling the switch will cause the train to have a greater impact on the one person it hits (inflicting a bloody and painful death worse than would be felt by the patient whose organs are harvested), it doesn’t change the result of our intuition that we should pull the switch (supposing not pulling still kills the five). Thus, the severity of death is independent of the problem we are trying to solve.

We have thus considered three rather plausible theories, but none of them has managed to account for all three variants of the life-rationing problem, which would seem to bring us closer to the thesis statement of this blog post. I claim we should not give up quite so easily. In particular, we will now proceed by supposing that there is indeed some unknown refinement we can make to the maximum goodness principle that can account for all three variants. We already established that such a refinement must depend on some property of the action performed, and thus we have a new principle, the modified principle: “the scenario to choose is the one that maximizes the moral goodness saved, subject to some constraint Y.”
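One way to read the modified principle is as a constrained maximization. What follows is only a rough sketch under loud assumptions: the names `Scenario`, `goodness_saved`, `modified_principle`, and `placeholder_y` are all made up for illustration, the moral weights stand in for whatever system X would assign, and `placeholder_y` is merely a stand-in for the unknown constraint Y (here it does nothing more than forbid the organ harvest). The fallback used when every option violates Y is likewise an assumption, not something the principle itself specifies.

```python
from dataclasses import dataclass
from typing import Callable, Sequence, Tuple


@dataclass(frozen=True)
class Scenario:
    """One option in a life-rationing problem (illustrative names only)."""
    action: str                       # what the chooser must do
    saved_weights: Tuple[float, ...]  # moral weights of those saved, as system X would assign them


def goodness_saved(s: Scenario) -> float:
    # Total moral goodness preserved by choosing this scenario.
    return sum(s.saved_weights)


def modified_principle(
    scenarios: Sequence[Scenario],
    violates_y: Callable[[Scenario], bool],
) -> Scenario:
    # Keep only the options that respect constraint Y.
    permitted = [s for s in scenarios if not violates_y(s)]
    # Assumption: if every option violates Y, fall back to plain maximization.
    pool = permitted if permitted else list(scenarios)
    return max(pool, key=goodness_saved)


def placeholder_y(s: Scenario) -> bool:
    # Hypothetical stand-in for the unknown Y: forbid exactly the organ harvest.
    return s.action == "harvest organs"


equal = 1.0  # equal morality assumed in the absence of other information
organ = [Scenario("harvest organs", (equal,) * 5), Scenario("do nothing", (equal,))]
train = [Scenario("pull switch", (equal,) * 5), Scenario("do nothing", (equal,))]
medicine = [Scenario("five small doses", (equal,) * 5), Scenario("one large dose", (equal,))]

for variant in (organ, train, medicine):
    print(modified_principle(variant, placeholder_y).action)
```

With this placeholder, the sketch picks "do nothing" in the organ variant, "pull switch" in the train variant, and "five small doses" in the scarce medicine variant. Passing a constraint that never fires (for example `lambda s: False`) recovers the plain maximum goodness principle, which would endorse the organ harvest.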

The modified principle can account for all three variants: the maximum goodness portion of it can account for our intuitions in the train and scarce medicine variant, whereas the “constraint Y” portion of it can account for why we don’t harvest the organs of an innocent person—because such an action disobeys the Y constraint. This is, for now at least, the best we can do, supposing we can find Y. However, we could at this point spend our effort producing a counterexample to the modified principle, despite the vagueness of Y.

How can we accomplish such a task? Well, observe that the modified principle does make a statement: it places a constraint on a specific action—harvesting the organs of a healthy patient against their will to save others—and thus, it suffices to come up with a hypothetical situation in which this seemingly awful action is certainly permissible.

Three attempts

We could be heinously unfair, and say: “How about if you had to choose between forcibly harvesting the organs of five people, versus harvesting the organs of one?” In this case, the prima facie morally plausible action to take is to forcibly harvest the organs of one person, but when either choice forces the Y-violating action, we are being deliberately obtuse. Thus, we must model a situation in which one action to take is the organ harvesting one, but the other option to take preserves the vagueness of Y, and is not necessarily in violation of it, and yet the better action to take would violate Y.

This second attempt could go along the lines of: “Suppose you had a town of 1,000 people, all of whom have mistakenly ingested some deadly poison. Suppose a tourist mistakenly swallowed the only cure, thinking it was a piece of fruit. However, this cure can be extracted if the tourist is killed and the fluids of his organs drained.” To save 1,000, it definitely seems plausible to kill the tourist and take his organs, which violates the constraint placed by the modified principle.

However, you could have the following reasonable objection: that the modified principle could theoretically strike a tradeoff between the amount of moral goodness preserved, and the strictness of the Y constraint, and thus in extremes like this, the amount of moral goodness to be preserved by killing the tourist is of such high value that the Y constraint can safely fail in this case, without breaking the modified principle. This certainly holds weight, as if we reduced 1,000 people to just two people, it is not clear that killing the tourist should be permissible. Put differently, we have cheaply taken advantage of the sort of extremism that can make reasonable philosophical arguments crumble. For instance, a reasonable-sounding principle like “Killing a baby is morally wrong” can be made to sound implausible by invoking extremes such as “What if the baby would end up as Hitler, or worse”. Hence, if our modified principle can be allowed to account for extremes, we need a hypothetical example in which the scenarios don’t push the principle to its extremity and force the Y constraint to fail.

I try to do this with a third attempt at a reasonable hypothetical situation that invalidates the modified principle. To construct one, I will attempt to take advantage of the similarities between the train variant and the organ variant. The modification will certainly sound (and be) absurd, but it suffices. Suppose as usual that the train were coming at high speed toward five rail-bound people. Suppose however that instead of a switch, I have a button, which when pushed, transports a random person in the world, say Eric, toward me, rips out his organs, and flings these organs toward the train in such a way that the train derails and crashes, killing no one. Eric of course dies.

It is not clear to me that pushing the button is morally impermissible, but doing so would violate the modified principle on the basis of the Y constraint. If you find this logic fishy, then perhaps you are inclined to think that pushing the button is impermissible, which preserves the Y constraint (since then, you are not harvesting the organs of some innocent fellow), and keeps the modified principle intact. However, this case seems too similar to the train variant, whose intuition suggested that pulling the switch (and analogously, pushing the button) was the right call to make.

It thus seems that there is no clear moral principle to account for all three variants, and more generally, the life-rationing problem, but I think we can come up with a theory, not strictly moral, that can account for all three of them. This is in fact a theory we have actually assumed accounted for all three of them so far, one we have based our search for a satisfactory moral principle on: the perceptiveness of our intuition.

Intuition and self-preservation

Thus far, we relied on the fact that our intuitions reacted positively to the train and scarce medicine variants, and negatively to the organ variant to guide our search for moral principles. Does this suggest that we can somehow rely on it even further for a more definitive explanation?

The most crucial problem with such an attempt, however, is the problem of self-preservation, in which the results of our intuition when self-preservation is a factor are at odds with the results when it isn’t a factor, all other things being equal. For instance, logic dictates that any principle which accounts for choosing 5 over 1 in the scarce medicine variant, prima facie, should operate independently of their identity (and more so be directed by their characteristics). In particular, if we gave them the same general features, then the choice to make might have to be, in part at least, a game of numbers, which the maximum goodness (and modified) principles hearken to. Our intuition supports this…until we are the ones at risk. Suppose in the scarce medicine variant, you were the one person who had to receive the large dose while five others died. The intuitive reaction to such a problem would be to preserve yourself, to want the large dose regardless of the five. Even if you’re hard-pressed to believe you would make such a selfish (but perhaps not irrational) choice, there are statistics that shed light on this. For instance, a Time psychology article reported on results of a survey of 147 people asked about a slight variation of the train problem. 90% of them responded intuitively that they would pull the switch, but only about one-third of them would pull if the one person on the rail was someone loved (an extension of self-preservation).

This suggests that any accounting principle should be developed independently of intuition, but this is to ignore the role intuition played in directing our search for such principles, as well as the role intuition plays in even more general moral principles. A simple example, which might at first seem counterintuitive, is to suppose that an alien colony exactly like Earth has to either destroy Earth, or be destroyed by Earth. Despite the self-preservation component of intuition, I don’t think we would deem it intuitively clear that the moral option to take is to destroy the colony (although this might certainly be the case). Even more concretely, if you were newly added to the waiting list for an organ you desperately need, and were bumped 100 spots ahead, jumping over others who had been waiting for years on the sole basis that whoever calls the shots is romantically interested in you, it is intuitive that the moral option to take is to reject such a move, even to the detriment of self-preservation.

Thus, intuition is not a strictly moral dictator, since in the scarce medicine variant it can push you toward taking the large dose, but it isn’t morally blind either, and seems to be a driving force for a lot of our moral principles. Earlier, I mentioned a moral system X that might be necessary in determining the relative moral weights of people in either scenario of a life-rationing problem. It seems that were we tasked to develop such a system, we would rely very strongly on the measure of our intuition.

To then summarize, a careful and diligent search for a moral principle that accounts for the three variants of the life-rationing problem failed to yield such a principle. It is quite likely that there are things we overlooked, more corners to be excavated, more arguments to be strengthened. On the other hand, it seems that we do not strictly require this moral principle to exist. Our intuition seems to possess some degree of moral aptitude, and in so far as moral principles exist to direct moral actions, such as what decision to make in a life-rationing problem, then thoughtful reliance on this intuition could be a very rational way to go.

**

An Elegant Response

Finally, to close off, I would like to present the most elegant solution to the train variant I’ve ever seen: