If I had a nickel for every class I was taking this semester… by Andi Q. '25

I’d have 4.5 nickels.

I’m a crusty senior now, so I have no fun and sparkly adventures to write about like the first-year bloggers have been doing (at least not for this week). However, what I do have as a senior is the freedom to choose fun elective classes without worrying much about prerequisites or fulfilling major requirements. I love talking about the classes I’m taking because they contribute so much to my enjoyment of MIT, so here’s what I’m taking this semester.

6.S965 (Digital Systems Laboratory II)

This class is the long-awaited sequel to 6.2050 (Digital Systems Laboratory I) – the intro FPGA class I took last fall. 6.S965 is all about revealing the inner workings of the black-box tools we took for granted in 6.2050. For example, much of our work in 6.2050 relied on hardware simulators that magically work; in 6.S965, we spent the first two weeks discussing how they work. We also learn about many techniques in modern digital hardware design that we weren’t smart enough for/didn’t have enough time to cover in 6.2050 – design verification, software-defined radio, and anything else that Joe Steinmeyer (the lecturer) thinks would be cool for us to experiment with. And just like 6.2050, there’s a big final project that I’m excited about, except now we get to use FPGAs that are more than 10x as powerful as the ones we used in 6.2050.

Surprisingly, most of the code we write in 6.S965 is in Python. Although Python may seem like a blasphemous choice for a hardware engineering class (where you’d typically see a low-level programming language like C or Assembly), it makes everything we do so much easier. A complicated testbench that would’ve taken me hours to set up using 6.2050’s infrastructure now takes me about twenty minutes using Python, with much more readable code to boot.

The special FPGAs we use that support Python are called “Pynq” boards. And yes, they are this shade of hot pink in real life.

The “S” in the subject number means that this class is a “special subject” (typically a limited-edition or experimental class about a new or niche research area); in this case, it’s the first time that Joe is offering 6.S965. It’s admittedly not the most polished class (yet); sometimes things[1] just don’t work even though Joe or the official documentation[2] said they would, but that’s the standard experience when working with hardware anyway. As a result, the class is much more open-ended and student-driven than other classes I’ve taken at MIT. It’s a lot like what I imagined/hoped MIT classes would be like before I applied.

Several of my friends are taking 6.S965 with me, and Joe is a great lecturer (who also brings home-grown cucumbers to lectures sometimes). Overall, I’m having a great time in this class.

6.S951 (Modern Mathematical Statistics)

This class is my first statistics class at MIT! I’m still not entirely sure how “mathematical” statistics differs from regular statistics, but I think it’s all about formally proving/disproving statistical results typically presented as empirical facts. For example, if you’ve taken a statistics class before, you may have learned that 30 is the magic number of samples where the central limit theorem kicks in; however, that’s not always true, and we proved that in our latest problem set.

Even Google’s AI gets it wrong! However, I should note that for most real-world data, the magic number 30 does actually work.
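To make the "30 isn't always enough" point concrete, here is a small simulation sketch. It is not the proof from the problem set, just an illustration under assumptions chosen for this example: a sample size of 30, a normal-approximation 95% confidence interval, and a heavily skewed lognormal distribution (parameters 0 and 2) as the "badly behaved" case. If the central limit theorem had fully kicked in at 30 samples, the interval should contain the true mean about 95% of the time.

```python
import math
import random
import statistics


def ci_coverage(draw, true_mean, n=30, trials=2000, seed=0):
    """Fraction of trials where a normal-approximation 95% CI covers true_mean."""
    rng = random.Random(seed)  # fixed seed so the run is repeatable
    hits = 0
    for _ in range(trials):
        sample = [draw(rng) for _ in range(n)]
        center = statistics.fmean(sample)
        half_width = 1.96 * statistics.stdev(sample) / math.sqrt(n)
        if abs(center - true_mean) <= half_width:
            hits += 1
    return hits / trials


# Well-behaved case: uniform on [0, 1), true mean 0.5.
uniform_cov = ci_coverage(lambda rng: rng.random(), true_mean=0.5)

# Heavily skewed case (an assumption for this sketch): lognormal(0, 2),
# whose true mean is exp(mu + sigma^2 / 2) = exp(2).
lognormal_cov = ci_coverage(
    lambda rng: rng.lognormvariate(0.0, 2.0), true_mean=math.exp(2.0)
)

print(f"uniform coverage:   {uniform_cov:.3f}")
print(f"lognormal coverage: {lognormal_cov:.3f}")
```

The uniform case should land close to the nominal 95%, while the lognormal case should fall well short of it, because a handful of huge values dominate the mean and 30 samples usually miss them.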

6.S951 is by far my hardest class this semester. Many seemingly obvious results are surprisingly difficult to prove formally; sometimes, those “obvious” things aren’t even true at all. It’s extremely mind-bending, but wow is it satisfying when things finally click. I feel like I’ve learned more linear algebra in this class than in 18.06 (the actual linear algebra class), and I finally understand why the matrix trace operator is so important now.

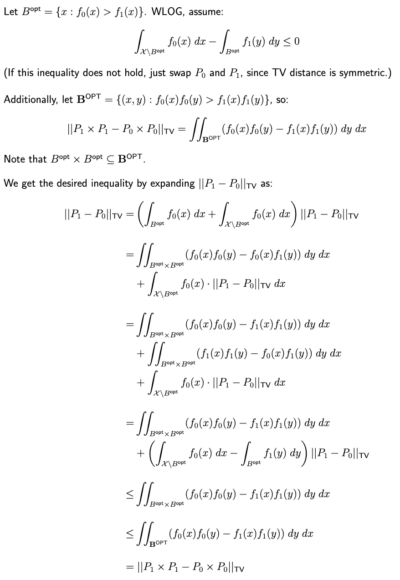

Admittedly, taking 6.S951 as my first statistics class is probably 90% of why it feels so hard. Two of its prerequisite classes are 18.650 (Fundamentals of Statistics) and 18.100 (Real Analysis), and I’ve taken neither. So while the other students (most of whom are PhD students who research statistics) write up super elegant proofs like this:

I write up horrible monstrosities like this:

(It’s still just as correct as the elegant solution above though – I got full credit for this monstrosity.)

Definitely not my brightest idea in hindsight.

Despite the difficulty, taking this class was still a pretty good decision. I can keep up with the content because Professor Stephen Bates explains things well, and he thankfully doesn’t gloss over proofs or definitions he deems “trivial”. Plus, I’ve learned a lot, especially from thinking about analogies that make these unintuitive results make more sense. Aside from 6.S951 being my only class with exams this semester, I have no regrets about taking this class. I might even take the sibling class, 18.656 (Non-Asymptotic Mathematical Statistics), in the spring.

6.5940 (TinyML and Efficient Deep Learning Computing)

This class is the coolest-sounding class I’m taking and the one I most looked forward to… but it turned out to be the most disappointing. Not that the content isn’t cool; quite the opposite – one of our assignments involves getting a large language model (like a smaller version of ChatGPT) to run entirely on a laptop[3]. However, these assignments all boil down to filling in a few lines of code and pressing “run”, and I’d hoped that there’d be more to them than that. The lectures cover so many interesting topics (like custom computing architectures for deep learning), and I wish that more of them would make it into the assignments.

I suppose it isn’t necessarily bad that the assignments are like this though. Machine learning tools like PyTorch have become so good that we only need to write a few lines of code to build powerful machine-learning models. And the assignments would probably be far too complex if we had to implement everything covered in the lectures from scratch. (Imagine if the poor TAs had to help debug 200+ students’ homemade ChatGPT clones…)

Still, I’m enjoying the class because the content is fascinating. It’s a culmination of almost everything I’ve studied at MIT, from computer architecture to machine learning. I think there will even be some quantum mechanics in the final two weeks of the semester! There’s also a final project, which should hopefully be much more exciting than the assignments.

Also, Professor Song Han is possibly the most overpowered professor I’ve had so far at MIT. Almost every lecture is about some new technique that he and his group pioneered in hardware engineering or machine learning. The papers linked to these techniques often have hundreds of citations too, and have won the best-paper award at whichever prestigious conference they first appeared in. Many of the charts we see in lectures end up being some variation on these two charts:

21M.587 (Fundamentals of Music Processing)

This class is one of the most fun classes I’ve taken at MIT. Not only is it a music class (already a strong indicator of being a great class), but it’s also a class about Fourier transforms, dynamic programming, and linear algebra! The class is taught by Professor Eran Egozy – creator of Guitar Hero and Rock Band, and veteran of the music technology industry. I especially love how he takes abstract algorithms and shows us how they were used to create technologies we use every day, like Shazam’s music recognition and Spotify’s song recommendations. It’s always cool to see real-world applications of things you learn in class; it’s even more cool when your professor is someone who created or worked on those applications.

Another great thing about 21M.587 is how interactive all the algorithms are. It makes understanding them so much easier when you can download an audio file, plug it into the code, and visualize how the algorithm processes the music. We do everything in Python, which makes it easy to implement all of this in just a few lines of code.

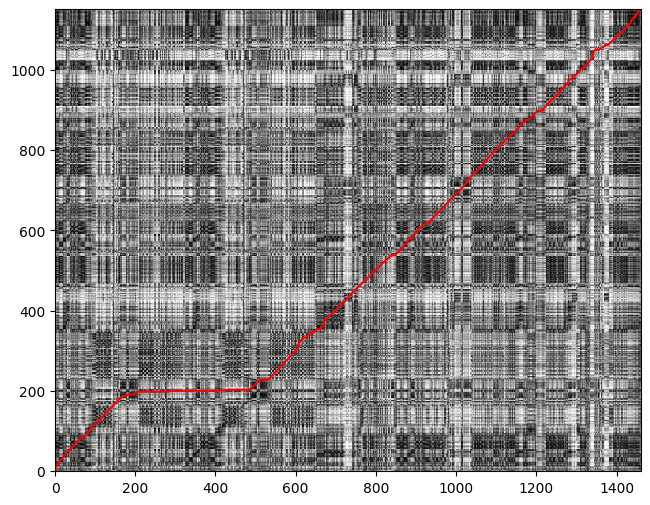

This class doesn’t cover machine-learning approaches to music processing[4] but instead focuses on more “fundamental” signal processing techniques. However, these techniques are still remarkably effective (and often outperform their machine-learning counterparts). For example, in our most recent problem set, I synced up two vastly different recordings of Beethoven’s Moonlight Sonata – one played on a grand piano and the other by the Eight Bit Big Bad – using these fundamental techniques.

A visualization of the synchronization using an algorithm called “dynamic time warping”. I wish I could put a demo of the synchronized audio in this blog, but I don’t think WordPress would be happy about that.
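For readers who haven't met dynamic time warping before, here is a minimal sketch of the textbook dynamic-programming version. It is not the problem-set code: the function name `dtw` and the toy number sequences are made up for illustration, and real music synchronization would compare per-frame audio features rather than single numbers. The idea is to find the cheapest way to pair up the elements of two sequences when one is allowed to stretch or compress in time.

```python
def dtw(a, b):
    """Return (total_cost, path) aligning sequences a and b.

    path is a list of (index_in_a, index_in_b) pairs from start to end.
    """
    n, m = len(a), len(b)
    inf = float("inf")
    # cost[i][j] = cheapest alignment of a[:i] with b[:j]
    cost = [[inf] * (m + 1) for _ in range(n + 1)]
    cost[0][0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            dist = abs(a[i - 1] - b[j - 1])
            cost[i][j] = dist + min(
                cost[i - 1][j - 1],  # advance in both sequences
                cost[i - 1][j],      # advance in a only (b "holds")
                cost[i][j - 1],      # advance in b only (a "holds")
            )

    # Walk backwards from the end, always stepping to the cheapest predecessor.
    path = []
    i, j = n, m
    while i > 0 and j > 0:
        path.append((i - 1, j - 1))
        _, i, j = min(
            (cost[i - 1][j - 1], i - 1, j - 1),
            (cost[i - 1][j], i - 1, j),
            (cost[i][j - 1], i, j - 1),
        )
    path.reverse()
    return cost[n][m], path


# The same "melody" played at half speed and at full speed.
slow = [0, 0, 1, 1, 2, 2, 3, 3]
fast = [0, 1, 2, 3]
total_cost, path = dtw(slow, fast)
print(total_cost)
print(path)
```

Because the two toy sequences contain the same values at different speeds, the alignment cost is zero and each element of `fast` is paired with two consecutive elements of `slow`. That pairing is the "warp" that lets two recordings play in sync.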

21M.426 (MIT Wind Ensemble)

Last (but certainly not least), I’m in MITWE again! It still brings me as much joy as it did when I joined the ensemble in my freshman fall semester. That’s why I keep coming back, seven semesters and counting :)

- [1] Even some things that you wouldn't expect to be able to not work, like ethernet cables.

- [2] It’s actually quite embarrassing for AMD how often this happens. The latest version of Vivado (the software we use to program the FPGAs) completely broke an important feature that has been working for the last 10+ years. And did I mention that Vivado is *paid* software?

- [3] This is a big deal because LLMs normally require a small town’s water supply and burning down a chunk of the Amazon rainforest to run.

- [4] There's a new music technology class about this now though!