classes i’m taking this spring by Fatima A. ’25

study abroad edition

after long deliberations and email exchanges with the transfer credit examiners, i registered for what i hope will be the equivalent of 48 units at MIT.

there are some key differences in academics between MIT and here. firstly, people mostly take classes in only their own major. i had the choice to take one class outside my major but decided to stick to math classes only. it was a little intimidating at first; i have never taken only math classes, or as many math classes in the same semester.

the other difference is that there is more room for a self-guided pace. concretely, this means that my classes all have weekly problem sheets or tutorials, which look like problem sets, but we do not have to turn them in every week. instead, there are only one or two courseworks you actually have to submit for each class in the entire term. this is because a lot of your grade is the final exam at the end of the term (in the summer term, technically).

perhaps a direct impact of having less coursework is fewer opportunities for collaboration. i might be biased because i don’t know as many people here, but i don’t think it is just that.

here are some thoughts on the classes i am taking.

I. Functional Analysis

i originally signed up for this class mostly because the pure math major requires you to take a second analysis class beyond real analysis and i had not fulfilled that requirement yet. in hindsight, it was a really good decision! the professor for this class is a really, really good lecturer so the lectures are very interesting. i am also gaining a new appreciation for analysis. i took real analysis when i was but a confused freshman and so, i never really liked analysis before.

one way to think about dimension is to see how many variables it takes to describe a space. for example, in order to describe any point in the real plane, you can give its x and y coordinates, so it is 2 dimensional. we look at finite dimensional spaces in linear algebra courses. roughly speaking, functional analysis is an extension of linear algebra to infinite dimensions, where unintuitive things begin to happen. an example of an infinite dimensional space would be the space of polynomials with real coefficients. you would have to give the coefficients of {1, x, x^2, x^3, …} to describe any polynomial, which is an infinite number of variables. (Paige B. ’24 talks about dimensions here and helped me exposit this!)

the course revolves around the properties of complete spaces. these are spaces in which every sequence whose elements eventually get arbitrarily close to each other (Cauchy) has a limit inside the space (convergent). think: no holes. so an example of a not-complete space would be the rational numbers. i could take a sequence of rational numbers approaching an irrational number and then there would be no limit for this sequence in the rational numbers.
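a minimal sketch of the rational-numbers example, assuming python's built-in fractions module and the iteration x → (x + 2/x)/2 starting from 1 (both picked here for illustration; the name sqrt2_approximations is made up). every term is an exact rational number and the terms bunch closer and closer together, but the number they are heading towards, √2, is not rational:

```python
from fractions import Fraction

def sqrt2_approximations(n_terms):
    """Return the first n_terms of a rational sequence approaching sqrt(2).

    Uses the iteration x -> (x + 2/x) / 2 starting from 1, so every term
    is an exact rational number (hypothetical helper, for illustration).
    """
    x = Fraction(1)
    terms = []
    for _ in range(n_terms):
        terms.append(x)
        x = (x + 2 / x) / 2
    return terms

terms = sqrt2_approximations(6)

# gaps between consecutive terms shrink (the Cauchy behaviour)
gaps = [abs(b - a) for a, b in zip(terms, terms[1:])]

# the squares approach 2, but no term ever squares to exactly 2
errors = [abs(t * t - 2) for t in terms]

for t, e in zip(terms, errors):
    print(t, float(e))
```

the sequence is Cauchy inside the rationals, but the only candidate for its limit lives outside them. that missing point is the "hole".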

we talk about linear operators that are continuous or, equivalently, bounded, and their various properties, including extending maps from linear subspaces. we also talk about various notions of convergence defined using these linear operators.

II. Statistical Theory

i wanted to take more probability and statistics classes, having enjoyed the one introductory course i took in the past. since this is, in some sense, the equivalent of the course people at MIT often take after the introductory probability course, i decided to go for it.

my class started by introducing exponential families (families of statistical models that behave nicely) and then went on to talk about various ways of estimation. essentially, one wants to infer something about the true distribution of the data from the given samples and there are different ways to do this. we talk about the frequentist and the Bayesian way of thinking about statistics. the frequentist way treats the parameter we are looking for as a constant whereas the Bayesian way involves having some prior beliefs or knowledge about the unknown parameter, represented through a distribution (called the prior), and then adjusting your views or beliefs after looking at the data to a new distribution (called the posterior) on the parameter.
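to see the two viewpoints side by side, here is a small sketch on a made-up coin-flipping example (the data, the Beta(2, 2) prior and all function names are assumptions for illustration, not anything from the course). it relies on the standard fact that a Beta prior combined with binomial data gives a Beta posterior:

```python
def mle_estimate(heads, flips):
    """Frequentist point estimate: the bias is a fixed unknown constant,
    estimated by the observed fraction of heads (maximum likelihood)."""
    return heads / flips

def beta_posterior(prior_a, prior_b, heads, flips):
    """Bayesian update for a coin with a Beta(prior_a, prior_b) prior.

    With binomial data the posterior is again a Beta distribution,
    with parameters (prior_a + heads, prior_b + tails).
    """
    return prior_a + heads, prior_b + (flips - heads)

def beta_mean(a, b):
    """Mean of a Beta(a, b) distribution."""
    return a / (a + b)

heads, flips = 7, 10      # made-up data
prior_a, prior_b = 2, 2   # made-up prior, mildly favouring a fair coin

post_a, post_b = beta_posterior(prior_a, prior_b, heads, flips)
print("frequentist estimate:", mle_estimate(heads, flips))
print("prior mean:", beta_mean(prior_a, prior_b))
print("posterior:", (post_a, post_b), "mean:", beta_mean(post_a, post_b))
```

the posterior mean lands between the prior mean and the frequentist estimate: the data pulls the prior belief towards what was observed.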

III. Geometry of Curves & Surfaces

i have really wanted to take a course in geometry for a while now. the first, shorter part of the course talks about regular curves, their tangent vectors and their curvature. the second part of the course defines regular surfaces and then defines their tangent spaces and the notions of curvatures for surfaces. regular surfaces are “nice” spaces that locally look like the Euclidean plane.
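as a rough illustration of curvature for curves, here is a sketch that estimates the curvature of a plane curve numerically. it assumes the standard formula |x′y″ − y′x″| / (x′² + y′²)^(3/2) for a regular plane curve and uses finite differences for the derivatives; the function names and the two test curves are made up for this example:

```python
import math

def curvature(curve, t, h=1e-4):
    """Approximate the curvature of a plane curve t -> (x(t), y(t)) at t.

    Central finite differences stand in for the first and second
    derivatives; the curve must be regular (nonzero velocity) at t.
    """
    x0, y0 = curve(t - h)
    x1, y1 = curve(t)
    x2, y2 = curve(t + h)
    dx, dy = (x2 - x0) / (2 * h), (y2 - y0) / (2 * h)
    ddx = (x2 - 2 * x1 + x0) / h**2
    ddy = (y2 - 2 * y1 + y0) / h**2
    return abs(dx * ddy - dy * ddx) / (dx * dx + dy * dy) ** 1.5

def circle(radius):
    """A circle of the given radius, traced counterclockwise."""
    return lambda t: (radius * math.cos(t), radius * math.sin(t))

def line(t):
    """A straight line through (1, 0) with direction (3, 2)."""
    return (3 * t + 1, 2 * t)

print("circle of radius 2:", curvature(circle(2.0), 0.7))
print("straight line:", curvature(line, 0.7))
```

a circle of radius r should come out close to 1/r and a straight line close to 0, up to the error of the finite-difference approximation.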

we learned how to take differentials of maps between arbitrary surfaces. because the tangent vectors at a point of a surface are given by derivatives of curves lying on the surface and passing through that point, the differential of a map at a point is defined as a map between the tangent spaces of the two surfaces that the map goes between.

we talk about the intrinsic and extrinsic notions for curves and surfaces. one can think of these as what someone living on the curve (or surface) would experience versus what someone who is looking at it from the outside knows. think about this example: if you are living on a curve, you will not be able to distinguish between a straight curve and a very “curvy” curve, i.e., all curves are intrinsically flat. this is why we only talk about the extrinsic notion of curvature for curves. another example could be (from The Shape of Space): take a sheet of paper and compare it to the same sheet bent into a half-cylinder-like shape. if you are a little being living on this sheet of paper, you cannot tell the difference between the two (intrinsic) but if you are looking at the paper in 3-D, you can tell the difference between the two surfaces (extrinsic). so we have extrinsic and intrinsic notions of curvature for surfaces. (may talk about curvature in a later blogpost!)
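the sheet-of-paper example can be checked numerically. the sketch below assumes a unit-square sheet, a bending radius of 1/π (so the sheet wraps exactly half way around a cylinder) and a diagonal path across the sheet; all of these, and the function names, are choices made for illustration. the length of the path measured along the surface is what the little being experiences, and the straight-line distance through 3-D is what the outside observer sees:

```python
import math

def flat(u, v):
    """A point on the flat sheet, sitting in 3-D space."""
    return (u, v, 0.0)

def rolled(u, v, radius=1 / math.pi):
    """The same point after bending the sheet around a cylinder.

    With radius 1/pi, a sheet of width 1 wraps exactly half way around.
    """
    angle = u / radius
    return (radius * math.cos(angle), radius * math.sin(angle), v)

def path_length(surface, n=10_000):
    """Length of the image of the diagonal path (t, t), 0 <= t <= 1,
    approximated by adding up n short straight segments."""
    points = [surface(i / n, i / n) for i in range(n + 1)]
    return sum(math.dist(p, q) for p, q in zip(points, points[1:]))

def straight_line_distance(surface):
    """Distance through 3-D space between two opposite corners."""
    return math.dist(surface(0.0, 0.0), surface(1.0, 1.0))

print("along the surface:", path_length(flat), path_length(rolled))
print("through 3-D space:", straight_line_distance(flat),
      straight_line_distance(rolled))
```

the two lengths along the surface agree up to the error of the approximation, while the distances through space do not: bending changes the extrinsic picture and leaves the intrinsic one alone.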

IV. Mathematical Foundations of Machine Learning

given that the class is with the maths department and is literally called “mathematical foundations,” i should have expected it to be theoretical. and yet.

the class defines learning, goes into theoretical explanations for convergence of different optimization methods etc. we talked about neural networks, gaussian processes, convolutional neural networks and are now talking about variational auto-encoders. i am just throwing words at this point, but the only funny thing out of all of this is seeing KL divergence in class last week (sponsored by my friend, matthew).

this course is different from the other courses because it is coursework only. we had our first coursework due a couple weeks ago and it was ~65% theory and ~35% coding although i still struggled with the coding a lot more. in addition to being bad at it, i just did not like the whole try different things, wait for an eternity for your code to run, then try more things. i was able to get guidance from the TAs though, which was really helpful!

i am glad to have chosen this class because machine learning feels so interdisciplinary. i saw someone’s dissertation on a category-theoretic approach to deep learning (which i have not actually gone through but would like to). apparently, people tried to use ML models for topology to see if they could find useful patterns and invariants of spaces and apparently, they did find some stuff. this is also not something i know very well but i would like to learn more about it at some point!

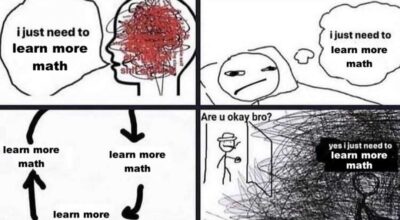

i had been scared at the thought of graduate school and doing only math because i have had at least one HASS every semester at MIT, but this semester has made me more confident that it will be fun! four years seem like such a long time but almost three years in, i feel like there is so much more i want to learn that i just have not had time for. so, although it feels so scary to think about graduate school, i am also very excited to be able to learn a lot more.

i had also been scared of completely forsaking writing poetry if i was not required, for one reason or another, to write it but that has also not been true and it is very reassuring to know that.

ending on this forever relevant meme:

taken from somewhere off twitter. where exactly, i do not remember

(thanks to Matthew H. ’25, Misheel O. ’25 and Paige for comments and ensuring correctness!)