[Guest Post] MIT THINK Scholars – A High School Research Program! by Cami M. '23

From one side of it to the other

Hiii, Audrey C. ’24 here! I’m briefly infiltrating the blogs to talk about the MIT THINK Scholars Program, which I had the privilege of taking part in during my junior year of high school. Now, as a college freshman, I’m on the committee that runs the program (along with Cami, Alan, and other incredibly cool people :)).

If you’re a high school student living in the US with a research or innovation idea, we encourage you to apply to THINK! It’s different from most other high school research competitions in that we require only a proposal in the application, rather than a completed project. We select 6 finalists each year, and each receives $1,000 in seed funding for their project and mentorship from MIT students and faculty throughout the spring semester.

Applications for the 2021 program are open from Nov 1st, 2020 until Jan 1st, 2021. Head over to think.mit.edu for guidelines and more information.

We’ll be hosting an Instagram live session about THINK through @mitadmissions on Friday 11/6 from 4-5pm EST! Tune in to get your burning questions answered or just chill with us :)

My project idea struck when I was wandering through my local contemporary art museum. I love the serenity of art museums, where only the (figurative) voices of the artwork and the occasional squeaky footstep penetrate the silence of the gallery. There was an exhibit exploring the concept of home, including one piece that was just a solid black canvas. According to the description, it was supposed to represent an obscured window, a critique of the American Dream of home ownership. Well, that was a ~little~ hard to see.

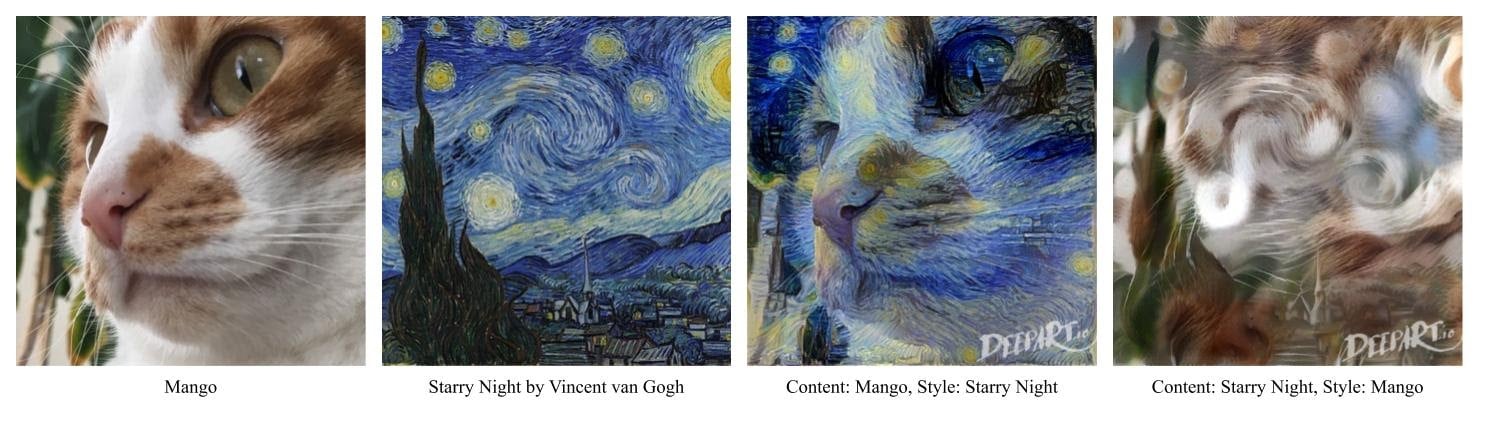

Around that time I first heard of neural style transfer: using neural networks, researchers[1] were able to transfer the style of one image onto the content of another. This transforms[2] photos into paintings! For example, my lovely cat Mango can become Starry Night (and vice versa):

1. Mango
2. Starry Night
3. Mango in the style of Starry Night
4. Starry Night in the style of Mango
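For the curious, here’s a tiny sketch of the trick at the heart of the style transfer paper: “style” is captured by a Gram matrix of correlations between one network layer’s filter responses, which throws away *where* things are in the image and keeps only *which features show up together*. This is a simplified illustration, not the paper’s code. It assumes the feature maps were already pulled out of a pretrained network (the paper uses VGG) and flattened into plain Python lists, and it only computes single-layer losses; the real algorithm repeatedly nudges the generated image’s pixels to lower a weighted sum of content and style losses. The function names are just made up for illustration.

```python
from typing import List

# A feature map for one network layer: features[i][k] is the response of
# filter i at (flattened) spatial position k.
Matrix = List[List[float]]


def gram_matrix(features: Matrix) -> Matrix:
    """G[i][j] = sum over positions k of features[i][k] * features[j][k].

    Summing over positions discards *where* features occur and keeps only
    how strongly pairs of filters fire together, i.e. the "style".
    """
    n = len(features)
    return [
        [sum(a * b for a, b in zip(features[i], features[j])) for j in range(n)]
        for i in range(n)
    ]


def style_loss(generated: Matrix, style: Matrix) -> float:
    """Single-layer style loss: squared Gram-matrix difference, scaled by 1 / (4 N^2 M^2)."""
    n = len(generated)     # N: number of filters
    m = len(generated[0])  # M: number of spatial positions
    g = gram_matrix(generated)
    a = gram_matrix(style)
    squared_diff = sum((g[i][j] - a[i][j]) ** 2 for i in range(n) for j in range(n))
    return squared_diff / (4 * n**2 * m**2)


def content_loss(generated: Matrix, content: Matrix) -> float:
    """Single-layer content loss: half the squared difference of the raw feature maps."""
    return 0.5 * sum(
        (x - p) ** 2
        for row_x, row_p in zip(generated, content)
        for x, p in zip(row_x, row_p)
    )
```

Shuffling the columns (spatial positions) of a feature map leaves its Gram matrix unchanged, which is why the style loss cares about textures and brushstrokes rather than layout, while the content loss compares feature maps position by position.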

I stared at the solid black canvas. I wondered: if I, a human, couldn’t really understand art, what would computers have to say? If computers could create art, could they possibly also understand it? And so my project of creating what’s basically an AI-powered art critic was born.[3]

I’m not sure what the 2019 THINK team saw in my proposal, but THANK YOU for believing in me! In non-pandemic years, finalists are taken on a week-long, all-expenses-paid trip to MIT, which consists of hanging out with the THINK committee, living in a host’s dorm community, eating way too much free food, talking with researchers and faculty for proposal feedback, and overall experiencing what life is like as an MIT student. Due to the pandemic, this year’s finalist trip will unfortunately most likely be virtual. We’re trying our best to recreate as much of the trip as possible and to give finalists a taste of MIT’s vibrant communities and research.

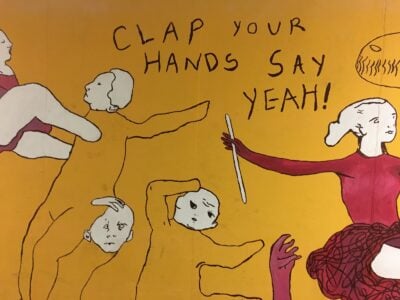

My first impression of MIT during my finalist trip was: wOWOW this place is actually so artsy! I had the notion of MIT being a hardcore tech institute (it’s in the name, after all), but I quickly saw that artistic creativity flourishes in every corner of MIT. When I dropped my stuff off at my host’s dorm room in East Campus, I marveled at the student murals that completely lined the halls. Mural content ranged from the realistic to the surrealistic to the ??? This was one of my favorites:

Another Really Cool Artsy Thing I got to see and experience was the Borderline murals. They line the walls of the underground tunnels[4] under Building 66 and come to life via animated augmented reality overlays. I’m currently doing Zoom MIT from home, but I have a feeling that between painting my future dorm room and contributing to the Borderline murals, my on-campus life will involve a good amount of wall painting.

As vibrant and colorful as the art were all the people and communities I got to meet. Hanging out with the THINK team and hearing them talk about their MIT lives and everything they were passionate about stirred within me an excitement for… life in general. I know I’m being vague here, but being around such passionate people made me excited about having that exhilarating freedom in college to figure out who I am and what I love.

One night during the trip I was working on my physics homework in East Campus and got stuck. So my host got one of her friends to help me out. That interaction lasted only a couple minutes, but I remember it so vividly because the fact that someone was willing to drop everything to teach some random clueless kid was just so heartwarming. I also met another East Campus resident who invited me to lick their salt crystal. Back then, potentially exchanging salivary fluids was not a concern of mine, so my name was added to the list of salt crystal lickers tacked onto their door. I was unnaturally proud to join the salt crystal lickers.

Also, just memeing around with the finalists and THINK team and talking about the most random things with my host into the wee hours of the night was fun. I stayed in touch with another finalist because of our shared fascination with Shen Yun billboards (5,000 years of civilization reborn!1!), and we’re good friends to this day.

Working on my project after the finalist trip was one of the most fulfilling things I’ve done. There’s a strange serenity to coding late at night with a mug of hojicha tea and lofi hip hop beats. There’s also a certain thrill in knowing that I’m turning a random idea born out of a solid black rectangle into something ~real~. I learned tons from brainstorming with my mentors, whom I respect immensely, about how to solve the many (inevitable) issues that popped up. Reading journal papers definitely took some time to get used to, but being able to ask my mentors to explain fancy-looking functions and convoluted technical wording made papers less intimidating.

Admittedly, there were times when I got frustrated that nothing was working and the output was gobbledygook: “pessimistically Marxist trod pumpkins” were definitely not the subject of Starry Night. But the artist in me admired that, like Jackson Pollock’s abstract drip paintings, my models’ word salads took on a chaotic beauty of their own. I was fairly sure that this incomprehensible output had something to do with the loss function converging way too quickly,[5] but I struggled for a month or two to find out why. Apparently this issue (more formally dubbed mode collapse) is known to be common but difficult to solve. My mentors helped me develop fairly legit ways to fix it, BUT ALAS it turned out to be something as dumb as me commenting out a line of code and forgetting about it. I felt bad for dragging my mentors through this for a month, but props to them for putting up with it :’).
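To make the loss-collapse mystery a bit more concrete, here’s a simplified sketch. It assumes a plain maximum-likelihood (cross-entropy) loss on next-word predictions, which is only an illustration: the LeakGAN-based model I ended up using trains adversarially and is more involved. What it shows is that a loss of exactly zero means the model gave probability 1 to every human-written word, so if the samples are still word salad, the loss probably isn’t measuring what you think it is (a commented-out line is a good suspect!). The `suspiciously_fast_drop` check and its default thresholds are hypothetical, just the kind of sanity check I wish I’d had earlier.

```python
import math
from typing import Dict, List, Sequence


def token_cross_entropy(predicted: List[Dict[str, float]], target: Sequence[str]) -> float:
    """Average negative log-probability the model assigns to each human-written word.

    predicted[t] maps candidate words to probabilities at step t; target[t] is
    the ground-truth word at step t (assumes one prediction per target word).
    """
    if not target or len(predicted) != len(target):
        raise ValueError("need one probability distribution per target word")
    total = 0.0
    for probs, word in zip(predicted, target):
        p = max(probs.get(word, 0.0), 1e-12)  # clamp so log(0) can't blow up
        total -= math.log(p)
    return total / len(target)


def suspiciously_fast_drop(loss_history: Sequence[float], steps: int = 10, floor: float = 1e-3) -> bool:
    """Hypothetical sanity check: flags a loss that is already near zero
    within the first `steps` training updates."""
    return any(loss < floor for loss in loss_history[:steps])
```

For example, `suspiciously_fast_drop([2.3, 0.0005, 0.0])` returns `True`, which in my case would have been a hint to go looking for a bug before looking for fancy fixes.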

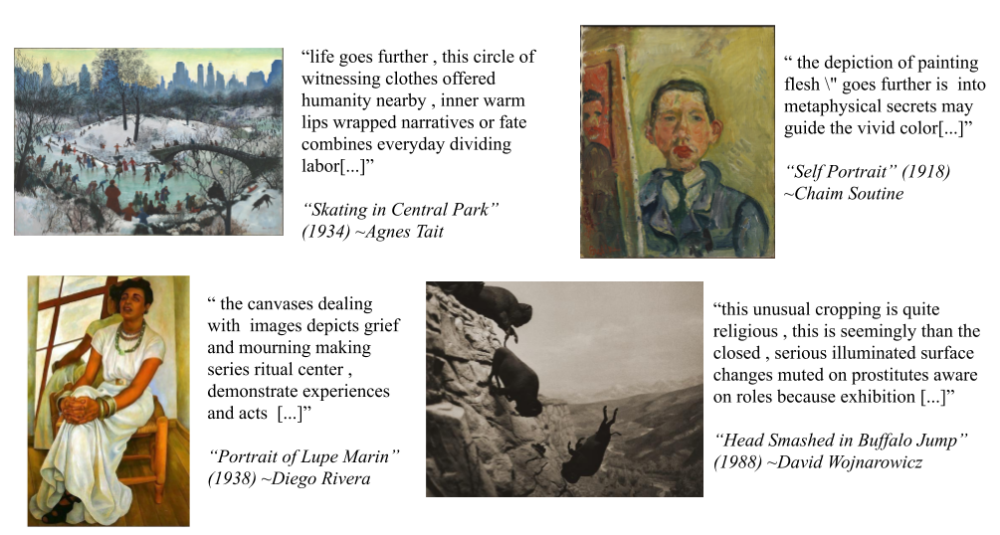

Below this paragraph are the more interesting outputs of the final AI art critic.[6] “Metaphysical secrets may guide the vivid color” sounds like something I might fluff up an essay with when I have no idea what I’m actually talking about :P. One of my mentors said that he read my model’s output out loud at a committee meeting like it was slam poetry, so I’ll just call it pseudo slam poetry rather than brainless fluff.

I’d like to think that this project is as much art as it is computer science, since it says something about the messy implications of machines gradually taking over our human world. People create art to express who they are and how they see the world. When human artwork is paired with machine-generated text, you tend to forget that the artwork was created by an actual human with human intentions and human experiences. Instead, you wonder what the machine-generated text is saying about the human artwork: perhaps art as a function of human creativity is just one big “metaphysical secret” ¯\_(ツ)_/¯. This leads to an interesting place where a human is critically examining the machine’s critical examination. I’m definitely not trying to replace human art critics, since I personally believe there’s something special about human creativity that can’t (and shouldn’t) be replicated by machines. But maybe machines can augment human art analysis. There’s an interesting tension here: this AI art critic is both distancing the human and augmenting the human.

It’s incredible how many things have come full circle since my experience as a finalist: in addition to being on the other side of things for THINK and getting to work alongside people I first met two years ago, one of the researchers I talked to during my finalist trip is now my UROP mentor. I am very grateful for the opportunities THINK gave me, and I’m excited to pay it forward by empowering high school students to make their craziest ideas a reality. Your idea doesn’t need to cure cancer or solve global warming (it certainly can, but mine clearly did not). It just needs to be feasible to complete in a semester, fit within a $1,000 budget, and hopefully excite you. Apply to THINK at think.mit.edu! :D

[addition by cami:

not so gentle reminder that:

We’ll be hosting an Instagram live session about THINK through @mitadmissions on Friday 11/6/20 from 4-5pm EST! Tune in to get your burning questions answered or just chill with us :)

]

1. A Neural Algorithm of Artistic Style: https://arxiv.org/abs/1508.06576

2. deepart.io hosts a version of the neural style transfer algorithm that you can play around with.

3. Interestingly, although my project focused on text generation (generating art analysis paragraphs, to be more specific), techniques from the neural style transfer paper inspired my proposed methods.

4. The tunnels were virtualized into a digital community art project by Borderline during CP* (virtual Campus Preview Weekend)! Check it out at http://tunnel.mit.edu/

5. Neural networks calculate a loss function to quantify the difference between the generated output (i.e., Marxist pumpkins in my case) and the ground truth output (i.e., art analysis written by humans). Through fine-tuning parts of the neural network, the loss should gradually approach a small number and the generated output should gradually get closer to the human-written analysis. But my network’s loss kept plummeting to zero almost immediately, despite the output being so bad D:

6. For the nerds out there who are curious about my model: a lot of my proposed methods didn’t work very well in practice, and I ended up modifying an unconditional text generation model called LeakGAN (https://arxiv.org/abs/1709.08624) to condition on image features, along with some other changes.